In my career I have both witnessed and taken part in many technical discussions and workshops on open systems integration and how to achieve it with middleware.

If you haven’t dealt with integrating systems beyond a handful of nodes and data types, it’s quite easy to fall into the trap of thinking middleware is not needed. As complexity grows, along with the requirement to allow third parties (both known and unknown) to develop widgets or code for a system, the prospect of middleware becomes highly attractive.

In a perfect world everyone would use the same on-the-wire protocol, data formats and workflows for interacting with their widget or piece of software. In the early days of Defence systems this was relatively straightforward: the Prime contractor and the Defence department generally sat in a room and decided how the system would interact, and the Prime would then develop the wire protocol and full implementation. In those days the prospect of a random third party appearing on the scene, plugging in their Artificial Intelligence/Radio/Sensor and needing to conduct integration was unheard of, and actively discouraged due to security, safety and the lack of a wider ecosystem.

This is no longer the reality of the world Defence systems exist in. Since Afghanistan and possibly earlier there have been increasing numbers of third-party system elements that have been integrated at pace in order to provide the capability required. Often these integrations have been conducted by Defence Departments away from any prime contractor involvement.

The UK recognised this and established the Generic Vehicle, Base and Soldier Architecture standards. Seemingly ahead of the curve, these standards sought to unify the approach to integration so that new capability could be added rapidly to these three platform types, and they included definitions of middleware, connectors and other aspects required to integrate systems. However, in practice there have been many birthing pains along the way, including incompatible ‘open standards’ (Open DDS, I’m looking at you), seeming power plays from Prime contractors and a lack of openly available data and development environments for third-party involvement. I won’t cover this in any detail here, as this post is about middleware rather than the history of these standards.

Almost in parallel, in a research lab far, far away (well, the USA at least!) a team was developing the Android Team Awareness Kit (ATAK), a command and control application that has seen significant growth in both domestic and worldwide usage. Due to Android packaging problems, along with a growing user base that wanted customisations, a plugin architecture was developed to allow third-party tools and widgets to interact with ATAK. Whether knowingly or not, ATAK suddenly became the middleware of choice for the soldier system and has allowed a growth in independent vendors and developers.

Now for those of us who live and work outside of the USA, this was all fine and dandy right up until we needed to develop a plugin or interact with the ATAK project. Admittedly, the open sourcing of the CivTAK code base and the SDK has now fixed this problem!

Still, the requirement for translation layers and middleware exists, as ATAK isn’t the only software in town and CoT isn’t the most open standard of message exchange (although the community at large is doing a grand job of reverse engineering it and building interoperable software for it!).

This is where the role of middleware really comes in. In the wider world (outside of Defence), middleware comes in a variety of flavours depending on the end objective, i.e. database, application, messaging protocol etc. This is where MQTT (Message Queuing Telemetry Transport) starts to make an appearance in this discussion, along with other options such as RabbitMQ and Lean Services (more on that later).

The thoughts in this blog post do not exist in isolation; instead I am picking up on the multiple debates in the soldier system ecosystem about middleware. Currently there are several international programmes trying to determine their own answer to the middleware question. To name a few: NATO’s Generic Open Soldier System Reference Architecture (GOSSRA) initiative; Australia’s Generic Soldier Architecture; the UK’s Generic Soldier Architecture; New Zealand’s Soldier Modernisation; and the US DoD’s Hyper Enabled Operator. A common debate at the core of these projects is whether to adopt MQTT, DDS, Lean Service Architecture or another middleware.

As described previously, these programmes want to adopt an open middleware that allows third party integration and development of functionality. The interest around MQTT messaging is in part what led me to write this blog post and a follow-up that implements MQTT in an ATAK plugin.

MQTT

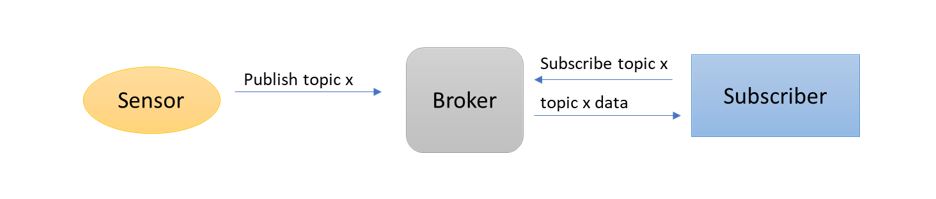

So, MQTT: this is a transport mechanism built on a publish and subscribe architecture, in which a broker distributes messages to the various components of a system. Broadly, clients publish messages to topics and subscribe to the topics that contain the information they need to operate or to make decisions upon. A topic could be designed around a specific item, data point or subject, for example location information or platform telemetry.
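To make the topic idea a little more concrete, here is a minimal sketch of how a broker decides which subscribers receive a published message. It is a toy, in-memory stand-in rather than a real MQTT broker or client library: `ToyBroker`, `topic_matches` and the `soldier/...` topic names are all made up for illustration. The wildcard behaviour follows the usual MQTT rules (`+` matches exactly one topic level, `#` matches everything below a level) but ignores details such as `$`-prefixed system topics.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Callback = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT-style topic filter matches a concrete topic."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: only valid as the last level of the filter.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # No '#' seen, so the filter and the topic must have the same depth.
    return len(filter_levels) == len(topic_levels)


class ToyBroker:
    """Hypothetical in-memory broker: no network, QoS or retained messages."""

    def __init__(self) -> None:
        self._subscriptions: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self._subscriptions[topic_filter].append(callback)

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver payload to every matching subscriber; return the delivery count."""
        delivered = 0
        for topic_filter, callbacks in self._subscriptions.items():
            if topic_matches(topic_filter, topic):
                for callback in callbacks:
                    callback(topic, payload)
                    delivered += 1
        return delivered


if __name__ == "__main__":
    broker = ToyBroker()
    broker.subscribe("soldier/+/location", lambda t, p: print("track update:", t, p))
    broker.publish("soldier/bob/location", b"51.5,-0.12")   # delivered
    broker.publish("soldier/bob/telemetry", b"battery=87")  # nobody subscribed
```

The point of the sketch is that publishers and subscribers never reference each other directly; the topic hierarchy is the only contract between them, which is why its design matters so much.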

The MQTT standard doesn’t describe several aspects of how it is to be implemented, namely serialisation and formats of the messages. These are up to the system implementor to design and build, which has its advantages!

If we are looking at ATAK with MQTT in mind as a middleware service, we come to a decision point early in our design considerations: where do we put the broker? Given the primary aim of ATAK is to provide situational awareness around the battlefield, does the broker belong somewhere on the battlefield? The cloud? The current TAK server architecture could act as a possible pattern, so maybe a broker within a formation? Before we leap to a conclusion, let’s look at why we may want to use a middleware at all, namely to integrate other widgets and software systems, allowing functionality of the wider system to be added to or changed with relative ease! In that case, do we want our new widget mounted on our personal weapon needing to talk to an MQTT broker that is relatively distant on the battlefield in order to update tracks on our ATAK instance?

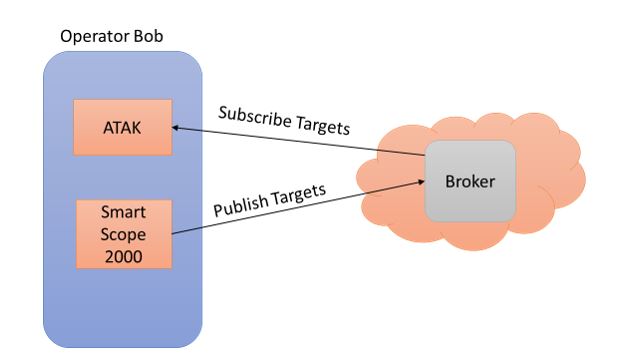

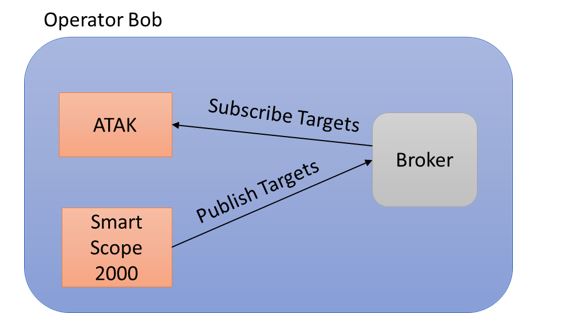

Mmm, probably not. That wouldn’t really make sense and would be very fragile given the nature of communications in the deployed environment. In fact, this might be a step backward from the current peer to peer architecture! Well hang on, what if we place the broker on Operator Bob?

This does look better; we can integrate our new widget and interchange data with ATAK, but is that the limit of our ambitions?

After all, there may be new data we want to exchange with other people or things on the battlefield that ATAK doesn’t need or care about. We either need to implement broker-to-broker interfaces with a many-to-many relationship (gah, back to complexity) or implement another piece of middleware. We do know that Operator Bob doesn’t exist in isolation (he isn’t Chuck Norris), so he is going to want to exchange data with other people and systems. Middleware interfacing with middleware doesn’t sound like an ideal situation; in fact it almost feels like an anti-pattern, a sign that we may be thinking too narrowly about the wider context our system exists within.

So, broker decisions aside, we also need to consider that MQTT doesn’t specify how we construct our messages! This middleware only really describes the transport, as opposed to specifying the protocol for interchange and how it could be packaged for sending ‘over the wire’. An easy thing to do would be to implement CoT over MQTT! We simply serialise the CoT or Protobuf from ATAK and then send that over an MQTT transport, allowing additional systems to publish and subscribe without needing to modify ATAK further than an MQTT plugin. Great for backward compatibility, but is this really the best method? We are not really using topics, and CoT seems a bit backwards in terms of protocol format (come on, it’s the 2020s and we are still XMLing?). Whilst we are at this, we could possibly optimise for low bandwidth communications by applying compression and more compact serialisation.
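As a rough illustration of the ‘CoT over MQTT’ idea, the sketch below builds a minimal CoT-style XML event with the Python standard library, derives a topic from its type and UID, and compresses the payload before it would be handed to an MQTT client. Everything here is an assumption for illustration: the `tak/cot/<type>/<uid>` topic layout, the use of zlib, the function names and the placeholder attribute values are my own choices and not something ATAK or the MQTT standard prescribes. No actual MQTT client is involved, so the packed bytes are simply what you would pass as the publish payload.

```python
import xml.etree.ElementTree as ET
import zlib
from datetime import datetime, timedelta, timezone

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def build_cot_event(uid: str, cot_type: str, lat: float, lon: float,
                    stale_seconds: int = 60) -> bytes:
    """Build a minimal CoT-style event as UTF-8 XML (illustrative values only)."""
    now = datetime.now(timezone.utc)
    event = ET.Element("event", {
        "version": "2.0",
        "uid": uid,
        "type": cot_type,
        "how": "m-g",
        "time": now.strftime(TIME_FORMAT),
        "start": now.strftime(TIME_FORMAT),
        "stale": (now + timedelta(seconds=stale_seconds)).strftime(TIME_FORMAT),
    })
    ET.SubElement(event, "point", {
        "lat": f"{lat:.6f}",
        "lon": f"{lon:.6f}",
        "hae": "0.0",         # placeholder height
        "ce": "9999999.0",    # placeholder error values
        "le": "9999999.0",
    })
    return ET.tostring(event, encoding="utf-8")


def cot_topic(uid: str, cot_type: str) -> str:
    """Hypothetical topic layout so subscribers can filter by type or by UID."""
    return f"tak/cot/{cot_type}/{uid}"


def pack(xml_bytes: bytes) -> bytes:
    """Compress the XML before publishing (zlib is an assumption, not a standard)."""
    return zlib.compress(xml_bytes, 9)


def unpack(payload: bytes) -> bytes:
    """Reverse of pack(), as a subscriber would do on receipt."""
    return zlib.decompress(payload)


if __name__ == "__main__":
    xml_bytes = build_cot_event("BOB-1", "a-f-G-U-C", 51.5, -0.12)
    payload = pack(xml_bytes)
    print(cot_topic("BOB-1", "a-f-G-U-C"), len(xml_bytes), "->", len(payload), "bytes")
```

Putting the type and UID into the topic means a subscriber could use a filter such as `tak/cot/+/BOB-1` to follow a single unit, which is one way of actually making use of topics rather than pushing everything down one pipe. Whether general-purpose compression helps on messages this small is worth measuring rather than assuming.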

There seems to be quite a bit here to consider, design and implement in order to achieve our aim. I have produced a working ATAK MQTT plugin as featured on my blog post here, along with publishing the source code. So have a play and see what you can come up with!

Lean Service Architecture

Now to circle back a little …

MQTT on the surface looks like a good fit, but it has some shortcomings that could be fixed with a little engineering and quite a bit of standardisation. Sounds like a research project! However, if we dive a little way back into the history of the UK’s Generic Soldier Architecture project, we find that a standard called Lean Service Architecture (LSA) was published. This is an openly published standard whose aim was to provide a service orientated architecture, allowing machine to machine interaction within the tactical environment. Unfortunately, there are not any open implementations of it yet, but if we look at the standard it’s a remarkably good fit for what we are trying to achieve.

The conditions the standard is designed for are described as:

· No central servers available or that can be assumed to be accessible;

· Variable quality communications; low bandwidth, high latency, frequently interrupted;

· Large numbers of frequently changing participating systems and platforms.

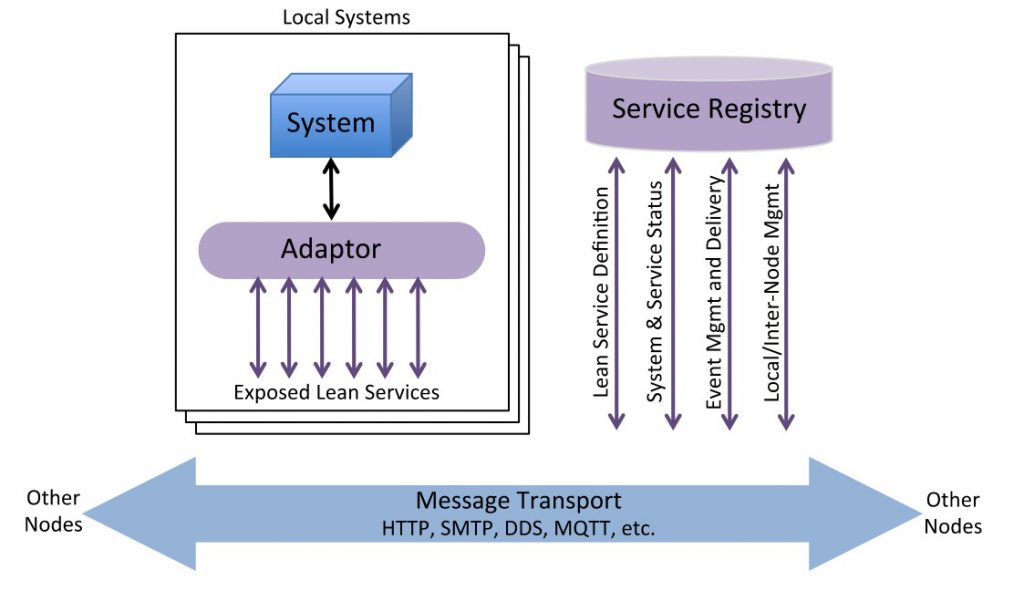

An LSA system is deployed with local adaptors (or native subsystems) talking to a local node, which includes a service registry (similar in concept to the MQTT broker). Where things get interesting is that LSA defines a set of messages and behaviours that must be used between nodes (effectively inter-broker communications). These are platform announcements, system status and service status updates. Seems like one of our major MQTT problems has been solved!

So, we can use a local Lean Service Node (Broker) and maintain node to node communications between various nodes on the battlefield without inventing a new process/protocol or implementing a new middleware just to serve that purpose!

The next problem was message format and serialisation for over-the-wire transport. Fortunately, this has also been thought about; the LSA standard lays out the concept of mandated services for managing the middleware itself and Lean Service Messages for exchanging data. These messages and services are defined in JSON and serialised using an implementation of the open source Apache Avro serialisation system. In using Avro we have the concept of a message schema, which defines the message format and is used by the Avro serialisation library to serialise the message into a compact form for on-the-wire transmission. One benefit of Avro is that it produces compact binary output that can fit over small tactical radio links (personally I have seen this work down to tens of Kbits). The big benefit of Avro over, say, Protobuf is that we do not need to pre-compile the message definitions; we can almost dynamically add new schemas to expand our message and service set as systems develop.

One further benefit is the introduction of namespaces and versioning. With LSA we could have messages defined in different namespaces, and with the concept of version numbers within schemas we can evolve our message definitions over time, with the system checking for forward and backward compatibility.
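To show why a schema-driven binary format ends up so much smaller than text, and why nothing needs to be pre-compiled, here is a small hand-rolled sketch. It is not the Apache Avro library and not an LSA message: it implements only a tiny subset of Avro’s binary encoding rules (zig-zag variable-length longs, length-prefixed UTF-8 strings, little-endian doubles, record fields written in schema order), and the `Position` schema and its field names are invented for the example. The schema is plain JSON parsed at run time, which is the property that lets new message types be added without a code generation step.

```python
import json
import struct
from typing import Any, Dict

# Hypothetical schema, loaded at run time rather than compiled into code.
SCHEMA = json.loads("""
{
  "type": "record",
  "namespace": "example.messages",
  "name": "Position",
  "fields": [
    {"name": "callsign", "type": "string"},
    {"name": "lat", "type": "double"},
    {"name": "lon", "type": "double"},
    {"name": "battery_pct", "type": "long"}
  ]
}
""")


def encode_long(value: int) -> bytes:
    """Zig-zag then variable-length encode a 64-bit signed integer."""
    zigzag = (value << 1) ^ (value >> 63)
    out = bytearray()
    while zigzag & ~0x7F:
        out.append((zigzag & 0x7F) | 0x80)  # low 7 bits, continuation bit set
        zigzag >>= 7
    out.append(zigzag)
    return bytes(out)


def encode_value(avro_type: str, value: Any) -> bytes:
    """Encode one primitive value; only the types used above are supported."""
    if avro_type == "long":
        return encode_long(value)
    if avro_type == "double":
        return struct.pack("<d", value)
    if avro_type == "string":
        raw = value.encode("utf-8")
        return encode_long(len(raw)) + raw
    raise ValueError(f"type not supported in this sketch: {avro_type}")


def encode_record(schema: Dict[str, Any], record: Dict[str, Any]) -> bytes:
    """Write fields back to back in schema order; no field names go on the wire."""
    return b"".join(
        encode_value(field["type"], record[field["name"]])
        for field in schema["fields"]
    )


if __name__ == "__main__":
    position = {"callsign": "BOB", "lat": 51.5, "lon": -0.12, "battery_pct": 87}
    binary = encode_record(SCHEMA, position)
    as_json = json.dumps(position).encode("utf-8")
    print(len(binary), "bytes binary vs", len(as_json), "bytes JSON")
```

The saving comes from the schema carrying the field names and types, so only the values travel over the radio link. The trade-off is that both ends must hold a compatible schema, which is where namespaces and versioning earn their keep.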

    {
      "type" : "lsbase",
      "version" : "1.0",
      "namespace" : "ls.messages.base",
      "name" : "lscall",
      "parameters":
      [
        {"servicefullname" : "string"},
        {"type": "enum", "symbols" : ["EVENT", "REQUEST", "RESPONSE", "ERROR"]},
        {"callcontext" : "string"},
        {"parameters" : dataparameters}
      ]
    }

In LSA we have two potential ways to interact with the middleware: a CALL and an EVENT. A CALL is a request/response (for example, ‘what is your battery status, Node 1?’) and an EVENT is a one-way broadcast (for example, ‘here is my location’). This gives us flexibility to think about how our messages translate into services. Let’s not simply think of position as an atomic message, but ask how we would consume, process and distribute it within a wider system.
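The difference between the two interaction styles can be sketched in a few lines. This is a toy, single-process illustration and not the API defined by the LSA standard: `ToyNode`, its method names and the `ls.power.battery` / `ls.position` service names are hypothetical, and only the four field names and the EVENT/REQUEST/RESPONSE/ERROR symbols are borrowed from the `lscall` definition above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Callable, Dict, List


class CallType(Enum):
    EVENT = "EVENT"
    REQUEST = "REQUEST"
    RESPONSE = "RESPONSE"
    ERROR = "ERROR"


@dataclass
class LsCall:
    """Mirrors the four fields of the lscall definition shown above."""
    servicefullname: str
    type: CallType
    callcontext: str
    parameters: Dict[str, Any] = field(default_factory=dict)


Handler = Callable[[Dict[str, Any]], Dict[str, Any]]
Listener = Callable[[LsCall], None]


class ToyNode:
    """Hypothetical local node: a service registry plus event fan-out."""

    def __init__(self) -> None:
        self._services: Dict[str, Handler] = {}
        self._listeners: Dict[str, List[Listener]] = {}

    def register_service(self, name: str, handler: Handler) -> None:
        self._services[name] = handler

    def on_event(self, name: str, listener: Listener) -> None:
        self._listeners.setdefault(name, []).append(listener)

    def call(self, name: str, context: str, parameters: Dict[str, Any]) -> LsCall:
        """CALL: a REQUEST goes in, and a RESPONSE or ERROR comes back."""
        request = LsCall(name, CallType.REQUEST, context, parameters)
        handler = self._services.get(name)
        if handler is None:
            return LsCall(name, CallType.ERROR, context, {"reason": "unknown service"})
        try:
            return LsCall(name, CallType.RESPONSE, context, handler(request.parameters))
        except Exception as exc:  # a failing handler becomes an ERROR reply
            return LsCall(name, CallType.ERROR, context, {"reason": str(exc)})

    def emit(self, name: str, context: str, parameters: Dict[str, Any]) -> int:
        """EVENT: one-way broadcast; returns how many listeners were notified."""
        event = LsCall(name, CallType.EVENT, context, parameters)
        listeners = self._listeners.get(name, [])
        for listener in listeners:
            listener(event)
        return len(listeners)


if __name__ == "__main__":
    node = ToyNode()
    node.register_service("ls.power.battery", lambda params: {"percent": 87})
    node.on_event("ls.position", lambda event: print("position:", event.parameters))
    print(node.call("ls.power.battery", "ctx-1", {}))
    node.emit("ls.position", "ctx-2", {"lat": 51.5, "lon": -0.12})
```

Thinking in these terms nudges the design towards services: a position report might be an EVENT that a tracking service consumes, while ‘where was Bob five minutes ago’ becomes a CALL against that same service.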

Summary

This is probably the longest post I have published so far and is more of a traditional ‘white paper’ style than a how-to on Cyber or ATAK. However, with the growing interest in middleware systems I wanted to push some of the discussions out into the open. Is there a single answer to what is the best middleware? Unfortunately not, because it depends on where we draw the boundary. If we consider a soldier or node in isolation, a middleware such as MQTT could make sense, but it also appears to be overkill for something that software such as ATAK already achieves through a plugin adaptor architecture. If we consider how soldiers or nodes may interact with the wider battlespace, or between themselves and autonomous systems, middleware makes absolute sense and there is a strong argument for the use of Lean Service Architecture.

Unfortunately, it appears the UK MOD has developed, tested and published a standard but has failed to nurture it. In the era of UK prosperity and supporting UK Small and Medium Enterprises (SMEs), it seems short-sighted to have apparently abandoned a UK capability. Surely there should be money somewhere in the system to support a community edition to help the global adoption and use of the standard?